CroMo-Mixup: Augmenting Cross-Model Representations for Continual Self-Supervised Learning

Overview of Cromo-Mixup

Overview of Cromo-MixupAbstract

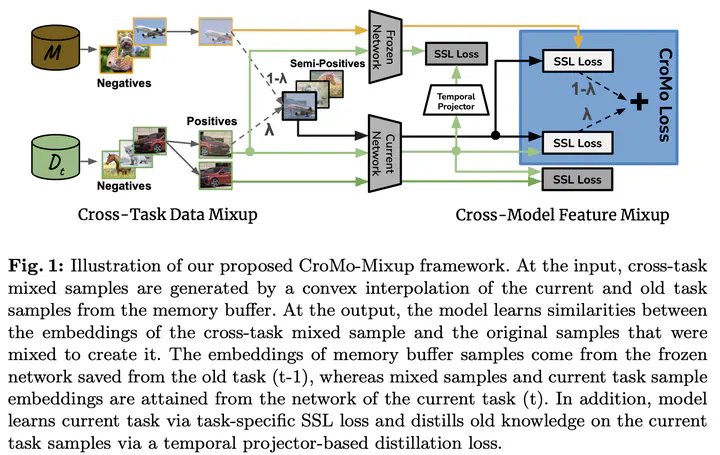

Continual self-supervised learning (CSSL) learns a series of tasks sequentially from unlabeled data. Two main challenges of continual learning are catastrophic forgetting and task confusion. While the CSSL problem has been studied with respect to catastrophic forgetting, little work has addressed task confusion. In this work, we show through extensive experiments that self-supervised learning (SSL) can make CSSL more susceptible to task confusion, particularly in the less diverse settings of class-incremental learning, because classes belonging to different tasks are not trained concurrently. Motivated by this challenge, we present a novel cross-model feature Mixup (CroMo-Mixup) framework that addresses this issue through two key components: 1) Cross-Task data Mixup, which mixes samples across tasks to enhance negative sample diversity; and 2) Cross-Model feature Mixup, which learns similarities between the embeddings of the mixed sample and of the original images, obtained from the current and old models, facilitating cross-task class contrast learning and old knowledge retrieval. We evaluate the effectiveness of CroMo-Mixup in improving both Task-ID prediction and average linear accuracy across all tasks on three datasets, CIFAR10, CIFAR100, and tinyImageNet, under different class-incremental learning settings. We also validate the compatibility of CroMo-Mixup with four state-of-the-art SSL objectives. Code is available at https://github.com/ErumMushtaq/CroMo-Mixup.
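As a rough illustration of the two components named in the abstract, the sketch below mixes a current-task batch with a batch of earlier-task samples, then scores each mixed embedding against both source embeddings using soft targets weighted by the mixing coefficient. It is a minimal NumPy sketch under stated assumptions, not the authors' implementation: it assumes a small buffer of earlier-task samples is available, uses random linear maps as hypothetical stand-ins for the current and old encoders, and substitutes a generic mixup-style soft-target contrastive loss (similar in spirit to MixCo) for the actual CroMo-Mixup objective. The names `cross_task_mixup` and `mixed_similarity_loss` are hypothetical.

```python
import numpy as np


def cross_task_mixup(x_current, x_old, lam):
    """Blend a current-task batch with a batch of earlier-task samples.

    Assumption: x_old comes from a hypothetical buffer of earlier-task data.
    """
    assert x_current.shape == x_old.shape
    return lam * x_current + (1.0 - lam) * x_old


def l2_normalize(z, eps=1e-12):
    """Row-wise L2 normalization so dot products become cosine similarities."""
    return z / (np.linalg.norm(z, axis=1, keepdims=True) + eps)


def mixed_similarity_loss(z_mix, z_cur, z_old, lam, temperature=0.1):
    """Generic soft-target contrastive loss (an illustrative stand-in).

    Each mixed embedding should match the current-model embedding of its
    current-task source with weight lam, and the old-model embedding of its
    old-task source with weight 1 - lam; all other rows act as negatives.
    """
    z_mix, z_cur, z_old = map(l2_normalize, (z_mix, z_cur, z_old))
    n = z_mix.shape[0]
    # Candidate set: n current-model embeddings followed by n old-model ones.
    candidates = np.concatenate([z_cur, z_old], axis=0)        # (2n, d)
    logits = z_mix @ candidates.T / temperature                  # (n, 2n)
    # Numerically stable log-softmax over the candidates.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Soft targets: lam on the current-task source, 1 - lam on the old one.
    targets = np.zeros_like(logits)
    idx = np.arange(n)
    targets[idx, idx] = lam
    targets[idx, n + idx] = 1.0 - lam
    return float(-(targets * log_probs).sum(axis=1).mean())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x_cur = rng.normal(size=(8, 32))   # current-task inputs
    x_old = rng.normal(size=(8, 32))   # hypothetical buffered earlier-task inputs
    lam = rng.beta(1.0, 1.0)           # mixing coefficient
    x_mix = cross_task_mixup(x_cur, x_old, lam)

    # Hypothetical stand-ins for the current (trainable) and old (frozen) encoders.
    w_cur = rng.normal(size=(32, 16))
    w_old = rng.normal(size=(32, 16))

    loss = mixed_similarity_loss(x_mix @ w_cur, x_cur @ w_cur, x_old @ w_old, lam)
    print(f"lambda={lam:.3f}, loss={loss:.4f}")
```

Weighting the targets by `lam` pulls each mixed embedding toward its current-task source in proportion to that source's share of the mix, and toward the old-model embedding of the earlier-task sample for the remainder. This is one plausible reading of "cross-model" similarity, assumed here only for illustration.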